Overview

Neocortex Vision is the robot’s perception and visual processing system—transforming raw camera feeds, depth sensors, and LiDAR into actionable visual information for teleoperation, autonomy, and research. It provides synchronized multi-modal sensor data in various formats: video streams for remote operators, fused point clouds for navigation algorithms, height scans for locomotion policies, and structured recordings for machine learning datasets.

The system distributes workloads across N100 and Jetson compute boards, adapting its processing pipeline based on operational needs—whether prioritizing low-latency streaming for teleoperation or comprehensive sensor fusion for autonomous operation.

Features

For Research & Development

Time-Synchronized Sensor Data - Access time-aligned, properly formatted sensor data to integrate custom algorithms and models:

- Position tracking from onboard SLAM for whole-body control policys

- RGB-D camera feeds for vision-language-action models

- Fused 3D point clouds for SLAM and world model research

- Height scan grids for locomotion policy development

- Multi-camera RGB streams with custom multi-camera 3A algorithm ensuring consistent exposure, white balance, and color across cameras for seamless stitching

- IMU data for sensor fusion and odometry

Pluggable AI Models - Swap or customize processing algorithms:

- Multi-camera 3A for consistent image quality

- Custom VLA models for embodied AI research

- Gaussian splatting or other 3D reconstruction methods

Core Capabilities

- Multi-sensor fusion - Combines LiDAR, ToF depth, and camera data into unified representations

- Multi-camera coordination - Synchronized capture with automated 3A across all cameras for consistent imaging

- Flexible processing modes - Configurable pipelines optimized for teleoperation, data collection, or autonomous operation

- Real-time streaming - Low-latency video encoding and transmission for remote control

Data Collection & Monitoring

- Structured recordings saved as MCAP for post-processing or RRD files for Rerun visualization

- Built-in profiling and logging across the entire vision pipeline

High-Level Architecture

The diagram below illustrates the complete Neocortex Vision pipeline—from raw sensor inputs at the top, through processing stages in the middle, to final outputs consumed by teleoperation interfaces and autonomous algorithms at the bottom.

Key data flows:

Teleoperation Path (left side): Raw camera feeds pass through video frame stitching to create immersive first-person and third-person views, which are rendered and streamed to the teleoperation UI for remote control.

Autonomy Path (right side): ToF sensors and LiDAR generate depth data and point clouds that are fused into a unified 3D representation. This feeds both the environment map for SLAM-based navigation and the height scan grid for locomotion policies.

Model Integration (center-left): The pipeline supports pluggable AI models—including Asimov’s multi-camera 3A algorithm, swappable VLAs, and custom user models—that can consume synchronized sensor data for research and deployment.

Legend:

- Dark gray nodes: Hardware sensors (Camera Array, ToF, LiDAR, IMU)

- Blue nodes: Final outputs consumed by applications (Teleoperation UI, Locomotion policy, Navigation)

- Teal nodes with dashed borders: Pluggable AI models (can be swapped or customized)

- Black nodes: Internal processing stages

- Solid arrows: Main data flow

- Dashed arrows: Optional or feedback connections

Available Data Formats

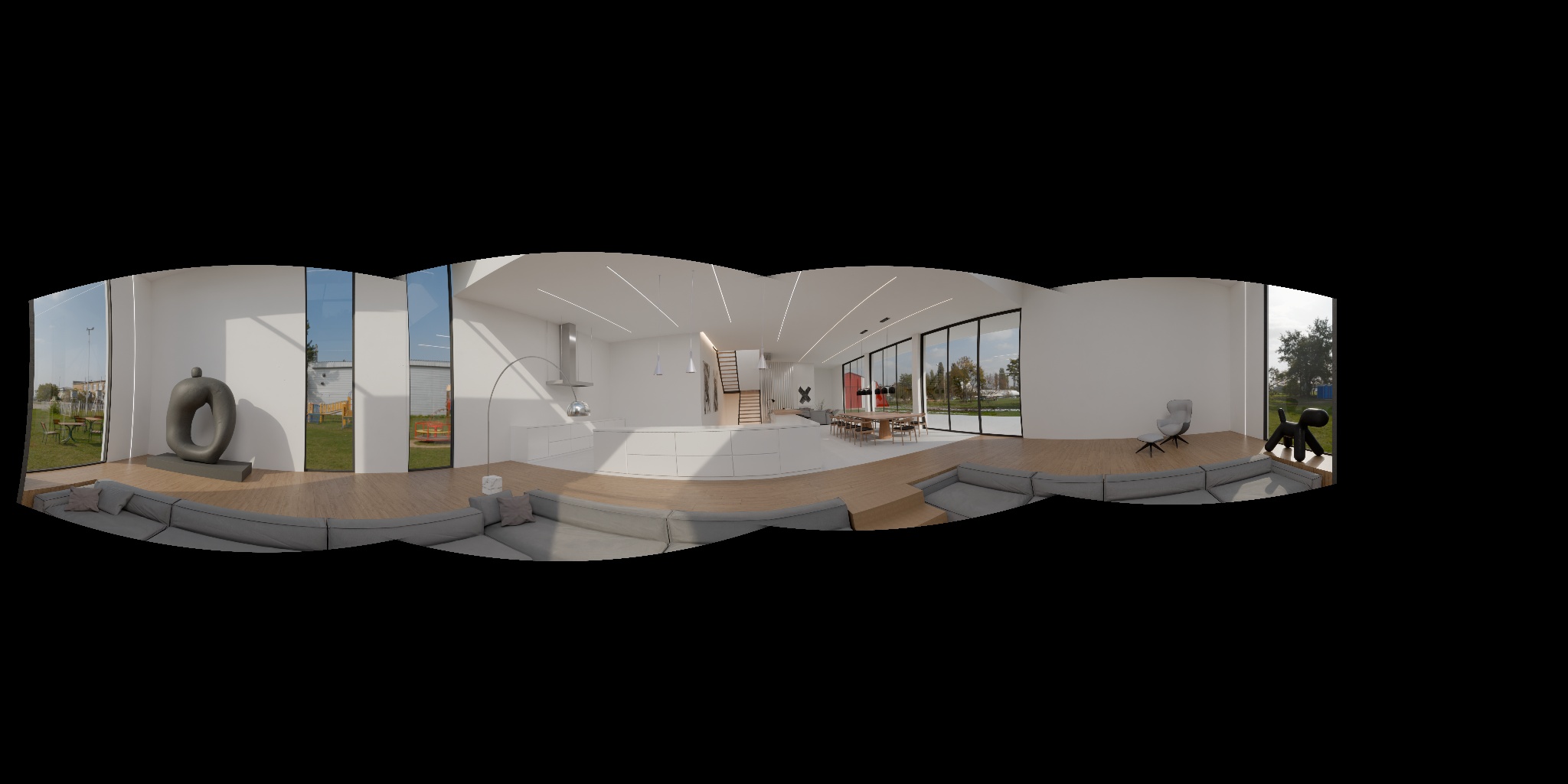

First-Person View - 180°

- Source: 2 main cameras

- What it provides: ~2:1 aspect ratio video stream that roughly corresponds to the robot’s left and right eye field of view at 30 FPS

- How to access: RTP video stream

- Processed on: N100 compute board using Vulkan

First-Person View - 360°

- Source: 2 main cameras + 4 secondary cameras

- What it provides: Equirectangular video covering close to 360° × 100° field of view at 30 FPS

- How to access: RTP video stream

- Processed on: Jetson compute board using CUDA pipeline (or PyTorch)

Third-Person View

Synthetic third-person view for better situational awareness during teleoperation.

- Source: First-Person View - 360°, Fused Point Cloud

- What it provides: Third-person view video stream built from rendered 3D reconstruction of the surroundings at 10 FPS

- How to access: RTP video stream, or Protobuf message via RML API

Fused Point Cloud

- Source: LiDAR point cloud, ToF depth map, known camera poses

- What it provides: Open3D compatible representation that combines 3D point clouds and depth information from different sensors covering different angles

- Processed on: Jetson compute board (point cloud registration and noise filtering)

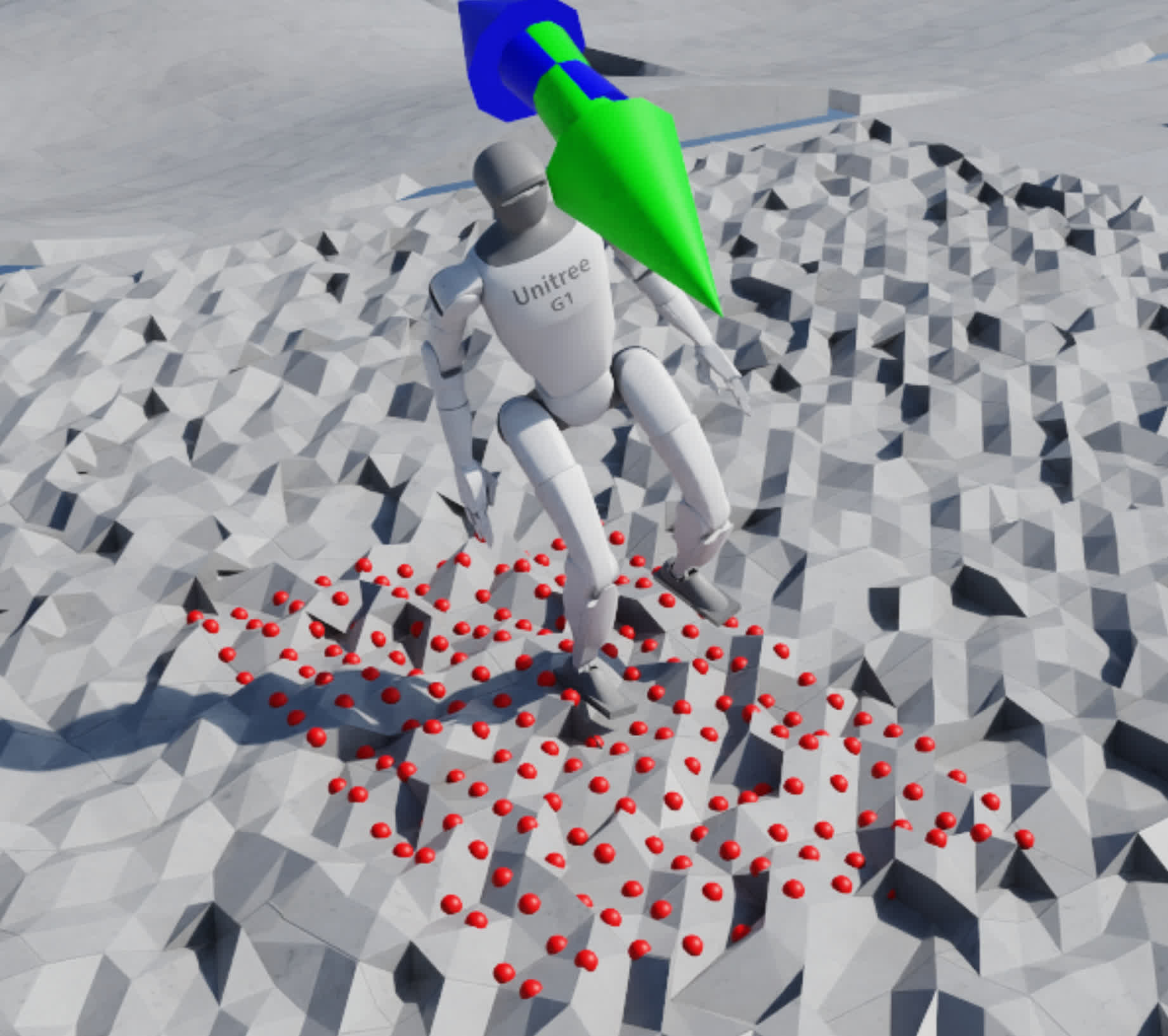

Height Map

Grid-shaped height map commonly used for locomotion policy.

- Source: Fused Point Cloud

- What it provides: 2D height map with 10cm resolution (adjustable) covering 2m x 1m area around the robot, updated at 10 FPS

- How to access: Protobuf message via RML API

- Processed on: Jetson compute board (resampling algorithm)

Raw ToF Depth Map

- Source: ToF depth camera

- What it provides: 640x480 depth image at 10 FPS with default hardware

- How to access: Protobuf message via RML API

- Processed on: Jetson compute board (denoising and hole filling)

Raw LiDAR Point Cloud

- Source: LiDAR

- What it provides: Point cloud at 10-20 FPS depending on the LiDAR model

- How to access: Protobuf message via RML API

- Processed on: Jetson compute board (filtering and downsampling)

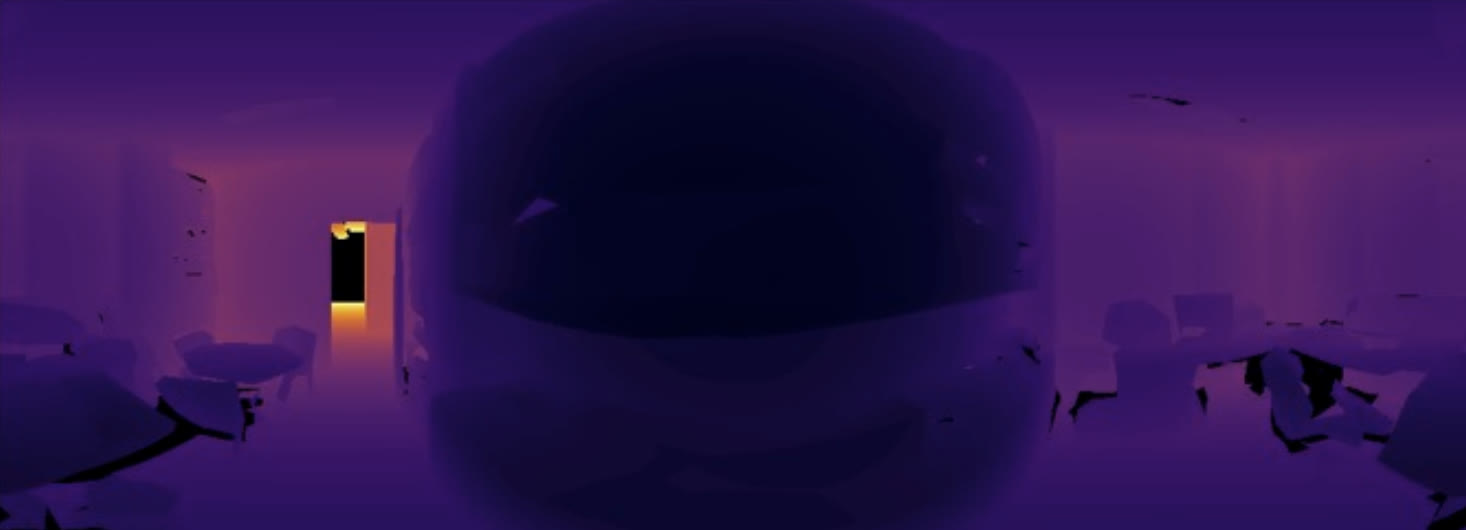

Equirectangular Depth Map

High resolution depth map covering close to 360° × 100° field of view.

- Source: First-Person View - 360°, Fused Point Cloud

- What it provides: Equirectangular depth image at 10 FPS

- How to access: Protobuf message via RML API

- Processed on: Jetson compute board (depth completion model)

Operation Modes

Due to computational power and I/O bandwidth limitations, not all algorithms for processing sensor data can run simultaneously. Therefore, we divide all neocortex-vision functionality into 3 use cases:

- Teleop (real-time vision)

- Data collection

- Semi-autonomous/autonomous (machine vision)

Teleop Mode

- Use video frames from all cameras to create Third-Person View

- Use video frames from all cameras to create First-Person View - 180°/360°

- Encode stitched frames for streaming

- Process IMU data for orientation (raw data not required)

Data Collection Mode

- Capture raw frames (2 main cameras) for basic teleoperation

- Record and store all sensor inputs onboard

- Disable all data processing algorithms except those essential for locomotion policy

Semi-autonomous/Autonomous Mode

- Disable teleoperation-only data such as Third-Person View and First-Person View - 180°/360°

- Enable 3D-related input and data processing algorithms

Custom Modes

Users can create custom modes by selecting specific algorithms and data processing tasks based on their requirements. There is no guarantee that all algorithms will run smoothly together; this will require some experimentation on the user’s part.

Data Availability

| Data | Teleop | Data Collection | Autonomous |

|---|---|---|---|

| Robot Position | ✓ | ✓ | |

| First-Person View | ✓ | ||

| Third-Person View | ✓ | ||

| Main Camera Feed | ✓ | ✓ | ✓ |

| Secondary Camera Feed | ✓ | ✓ | |

| Fused Point Cloud | ✓ | ✓ | |

| ToF Depth Image | ✓ | ✓ | |

| Equirectangular Video | ✓ |

Algorithm Availability

Which algorithms can be enabled for each operation mode:

| Algorithm | Teleop | Data Collection | Autonomous |

|---|---|---|---|

| Point Cloud to Height Scan | ✓ | ✓ | ✓ |

| Multi-Sensor Point Cloud Fusion | ✓ | ✓ | |

| Multi-Camera 3A | ✓ | ✓ | ✓ |

| First-Person View Stitching | ✓ | ✓ | |

| Equirectangular Depth Model | ✓ | ||

| Equirectangular Stitching | ✓ | ||

| Third-Person View Rendering | ✓ |