Our Mission

Menlo-ROS is the middleware spine for modern robots: a lean, deterministic layer that sits between cloud applications and robot SDKs. Built primarily in C/C++ and optimized for embedded Linux, it replaces ad-hoc stacks with a single, predictable control plane for operation, communication, and scale. Where ROS and DDS emphasize flexibility, Menlo-ROS is opinionated by design: it prioritizes timing guarantees, energy efficiency, and native observability so that a fleet behaves the same way whether it has one robot or a billion.

Our mission is to design Menlo-ROS from the first principles of embedded engineering: minimizing indirection in software and eliminating unnecessary CPU cycles. In a future where 8 billion humanoid robots could be operating in homes, workplaces, and cities, every watt saved and every determinism guarantee matters.

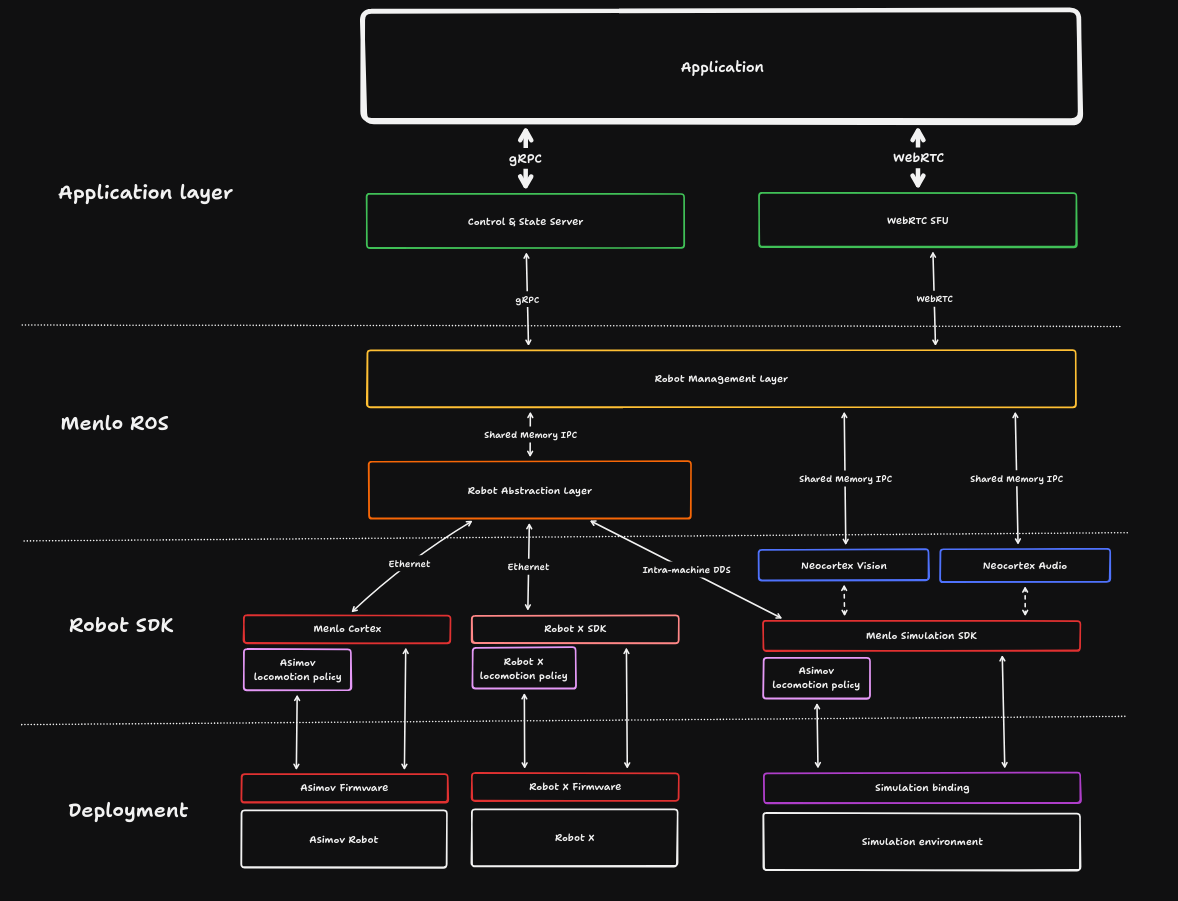

Architecture

Menlo-ROS is the middleware spine of the system. It lives between high-level cloud applications and low-level robot SDKs, enforcing consistency, determinism, and observability across all platforms.

1. Application Layer (Top)

Applications never talk to robot SDKs directly. Instead, they interact through two stable, cloud-native entry points:

- Control & State Server (gRPC) – bidirectional commands and telemetry

- WebRTC SFU – real-time audio/video/media streaming

2. Menlo-ROS (Middle)

This is where abstraction and management happen. Menlo-ROS is composed of:

- Robot Management Layer (RML) – the robot’s cloud gateway:

  - WebRTC packaging of media from Neocortex Vision/Audio

  - Prometheus telemetry push for thousands of metrics

  - Command validation and safe execution

  - Alert aggregation and forwarding

- Robot Abstraction Layer (RAL) – the universal translator:

  - Detects and binds to connected robots automatically

  - Normalizes CAN, EtherCAT, DDS, or shared-memory protocols

  - Maintains a consistent API contract (init, state, command, cleanup)

  - Guarantees 1 kHz real-time loops on RTLinux

- Neocortex Modules – specialized, high-performance components that plug into RML via shared memory:

  - Vision (camera capture, encode, preprocessing)

  - Audio (microphone input, echo cancellation)

Together, RML + RAL turn a messy world of vendor SDKs and hardware quirks into one deterministic, efficient control plane.
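The four-call contract (init, state, command, cleanup) and the fixed-rate loop can be pictured with a small sketch. This is an illustration under stated assumptions, not the actual Menlo-ROS API: `RobotBackend`, `JointState`, `JointCommand`, `LoopbackBackend`, and `run_control_loop` are hypothetical names. The loop uses portable `std::chrono` sleeping against absolute deadlines instead of a real-time scheduler, so it shows the shape of a 1 kHz loop without providing any timing guarantee.

```cpp
#include <array>
#include <cassert>
#include <chrono>
#include <cstddef>
#include <thread>

// Hypothetical fixed-size types: no heap allocation on the control path.
constexpr std::size_t kMaxJoints = 8;

struct JointState {
    std::array<double, kMaxJoints> position{};  // radians
};

struct JointCommand {
    std::array<double, kMaxJoints> target{};    // radians
};

// Hypothetical contract mirroring the four calls named in the text.
class RobotBackend {
public:
    virtual ~RobotBackend() = default;
    virtual bool init() = 0;
    virtual JointState state() = 0;
    virtual void command(const JointCommand& cmd) = 0;
    virtual void cleanup() = 0;
};

// Stand-in backend for illustration: every command takes effect instantly.
class LoopbackBackend : public RobotBackend {
public:
    bool init() override { ready_ = true; return true; }
    JointState state() override { return state_; }
    void command(const JointCommand& cmd) override {
        state_.position = cmd.target;
        ++commands_seen_;
    }
    void cleanup() override { ready_ = false; }

    bool ready() const { return ready_; }
    int commands_seen() const { return commands_seen_; }

private:
    JointState state_{};
    bool ready_ = false;
    int commands_seen_ = 0;
};

// Runs `cycles` iterations at a fixed period. Deadlines are absolute
// (start + k * period), so one late wake-up does not accumulate as drift.
// Returns the number of completed cycles, or -1 if init() fails.
inline int run_control_loop(RobotBackend& robot, int cycles,
                            std::chrono::microseconds period) {
    assert(cycles >= 0);
    if (!robot.init()) return -1;
    auto deadline = std::chrono::steady_clock::now();
    int done = 0;
    for (; done < cycles; ++done) {
        const JointState s = robot.state();
        JointCommand c;
        for (std::size_t j = 0; j < kMaxJoints; ++j) {
            c.target[j] = s.position[j] + 0.001;  // toy controller: small step
        }
        robot.command(c);
        deadline += period;
        std::this_thread::sleep_until(deadline);
    }
    robot.cleanup();
    return done;
}
```

Calling `run_control_loop(backend, 1000, std::chrono::microseconds(1000))` would drive the backend for roughly one second at a nominal 1 kHz. On a real target the loop thread would also need a real-time scheduling policy and locked memory, which this sketch leaves out.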

3. Robot SDKs (Bottom)

Beneath Menlo-ROS are the vendor-specific SDKs:

- Asimov SDK – with its locomotion policies and firmware

- Other Robot SDKs – Unitree, Realman, etc.

- Simulation SDKs – MuJoCo, Isaac, or custom simulation bindings

These SDKs speak in their own dialects, but Menlo-ROS absorbs those differences. Applications remain unaware of whether they are talking to a physical robot, a simulator, or a new vendor SDK.
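One way to picture "absorbing dialects" is an adapter per SDK that converts vendor-native values into a single normalized form. The sketch below is illustrative only: the two vendor structs, their units (degrees and encoder ticks), and every name in it are invented for this example and do not describe any real SDK or the Menlo-ROS implementation.

```cpp
#include <cassert>
#include <cstdint>

constexpr double kPi = 3.14159265358979323846;

// Normalized reading that application-side code sees: always radians.
struct JointReading {
    double angle_rad;
};

// Hypothetical vendor A reports joint angles in degrees.
struct VendorAJoint {
    double angle_deg;
};

// Hypothetical vendor B reports raw encoder ticks.
struct VendorBJoint {
    std::int32_t ticks;
    std::int32_t ticks_per_rev;
};

// One overload per dialect; both produce the same normalized type.
inline JointReading normalize(const VendorAJoint& j) {
    return JointReading{j.angle_deg * kPi / 180.0};
}

inline JointReading normalize(const VendorBJoint& j) {
    assert(j.ticks_per_rev > 0);
    return JointReading{2.0 * kPi * static_cast<double>(j.ticks) /
                        static_cast<double>(j.ticks_per_rev)};
}

// Code above the adapter depends only on JointReading, never on a vendor type.
inline bool within_limit(const JointReading& r, double limit_rad) {
    return r.angle_rad <= limit_rad && r.angle_rad >= -limit_rad;
}
```

With this shape, a quarter turn reported as `VendorAJoint{90.0}` and as `VendorBJoint{1024, 4096}` normalizes to the same angle, so a check such as `within_limit` behaves identically regardless of which backend produced the reading.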

4. Deployment Layer (Physical Robots & Simulation)

Finally, the SDKs interface with:

- Firmware + Hardware – the deployed robots (Asimov, Robot X, etc.)

- Simulation Environments – binding into MuJoCo, Isaac Sim, or other physics engines

Together with the SDKs that drive it, this is the only vendor-specific part of the stack. Everything from Menlo-ROS upward is universal.

Why This Matters

By positioning Menlo-ROS squarely between applications and SDKs:

- Applications stay clean – always using the same gRPC/WebRTC APIs

- Robots stay swappable – SDKs can be changed without touching application code

- Performance stays deterministic – shared memory IPC, static allocation, RTLinux scheduling

- Operations stay scalable – Prometheus, WebRTC, and gRPC are proven at cloud scale
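The "static allocation" point can be illustrated with a fixed-capacity single-producer/single-consumer queue whose storage is sized at compile time. This is a generic sketch of the technique, not Menlo-ROS code: `SpscRing` and `Sample` are hypothetical names, and the example stays inside one process. Placing such a structure in a shared-memory segment would additionally require a trivially copyable element type and atomics that are lock-free on the target.

```cpp
#include <array>
#include <atomic>
#include <cassert>
#include <cstddef>

// Fixed-capacity SPSC queue. One slot is kept empty to tell "full" apart
// from "empty", so the queue holds at most N - 1 items.
template <typename T, std::size_t N>
class SpscRing {
    static_assert(N >= 2, "need at least two slots");

public:
    // Producer side. Returns false when full instead of blocking or allocating.
    bool push(const T& item) {
        const std::size_t head = head_.load(std::memory_order_relaxed);
        const std::size_t next = (head + 1) % N;
        if (next == tail_.load(std::memory_order_acquire)) return false;
        slots_[head] = item;
        head_.store(next, std::memory_order_release);
        return true;
    }

    // Consumer side. Returns false when empty.
    bool pop(T& out) {
        const std::size_t tail = tail_.load(std::memory_order_relaxed);
        if (tail == head_.load(std::memory_order_acquire)) return false;
        out = slots_[tail];
        tail_.store((tail + 1) % N, std::memory_order_release);
        return true;
    }

private:
    std::array<T, N> slots_{};          // storage sized at compile time
    std::atomic<std::size_t> head_{0};  // next slot to write (producer-owned)
    std::atomic<std::size_t> tail_{0};  // next slot to read (consumer-owned)
};

// Hypothetical payload for illustration.
struct Sample {
    unsigned sequence;
    double value;
};
```

A declaration such as `SpscRing<Sample, 4> ring;` reserves all of its storage up front. When the queue is full, `push` reports failure rather than growing, which keeps memory use and worst-case latency bounded.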