Robot Abstraction Layer (RAL)

Overview

The Robot Abstraction Layer (RAL) sits between high-level intelligence and low-level hardware. It provides a stable, hardware-agnostic interface so Menlo-ROS behaves identically across robot platforms, simulators, and transports. Upstream components see the same interface whether the target is a Unitree G1, a Realman manipulator, or a MuJoCo simulation.

What the interface is:

- Universal schema: a standardized state/command layout exported via shared memory.

- In-process API: a lightweight C API to read state and submit commands on deterministic schedules.

- Primary client: RML (Robot Management Layer) uses this interface; other in-process components may as well. This is not a network API.
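To make the "universal schema" idea concrete, here is a minimal sketch of what a fixed-layout state/command block could look like. Every name in it (`ral_state_t`, `ral_command_t`, `RAL_MAX_JOINTS`, `RAL_SCHEMA_VERSION`) and the field set are illustrative assumptions, not RAL's actual definitions.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical layout: all names and fields are assumptions for illustration. */
#define RAL_MAX_JOINTS     64u
#define RAL_SCHEMA_VERSION 1u

typedef struct {
    uint32_t schema_version;               /* lets clients reject a mismatched layout */
    uint32_t joint_count;                  /* number of valid entries in the arrays */
    uint64_t timestamp_ns;                 /* when the state was sampled */
    double   position_rad[RAL_MAX_JOINTS];
    double   velocity_rad_s[RAL_MAX_JOINTS];
    double   torque_nm[RAL_MAX_JOINTS];
    double   temperature_c[RAL_MAX_JOINTS];
} ral_state_t;

typedef struct {
    uint32_t schema_version;
    uint32_t joint_count;
    uint64_t timestamp_ns;                 /* when the command was issued */
    double   position_rad[RAL_MAX_JOINTS];
    double   torque_nm[RAL_MAX_JOINTS];
} ral_command_t;

/* Zero a state block and stamp it with the schema version. */
static void ral_state_init(ral_state_t *s, uint32_t joint_count)
{
    assert(s != NULL && joint_count <= RAL_MAX_JOINTS);
    memset(s, 0, sizeof *s);
    s->schema_version = RAL_SCHEMA_VERSION;
    s->joint_count = joint_count;
}

/* A client should refuse to interpret a block it does not understand. */
static int ral_state_is_compatible(const ral_state_t *s)
{
    return s != NULL
        && s->schema_version == RAL_SCHEMA_VERSION
        && s->joint_count <= RAL_MAX_JOINTS;
}
```

Fixed-size arrays keep the block free of pointers, which is what allows the same bytes to be mapped into more than one address space. The version field gives a client a cheap way to fail closed on a layout mismatch.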

Real-time baseline:

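- RAL’s guarantees assume a Linux kernel built with PREEMPT_RT (the out-of-tree RT patch set, or a mainline kernel with CONFIG_PREEMPT_RT enabled), which makes most kernel sections preemptible and runs interrupt handlers in threads. Combined with real-time scheduling priorities and CPU affinity, this gives bounded latencies and predictable 1 kHz control loops.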
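A 1 kHz loop on such a kernel is usually paced against absolute deadlines so that jitter in one cycle does not accumulate into drift. The sketch below shows that pattern with POSIX `clock_nanosleep`. The helper names (`timespec_add_ns`, `run_periodic`, `count_step`) are hypothetical and not part of RAL.

```c
#ifndef _POSIX_C_SOURCE
#define _POSIX_C_SOURCE 200809L
#endif
#include <assert.h>
#include <errno.h>
#include <time.h>

#define NSEC_PER_SEC 1000000000L
#define PERIOD_NS    1000000L      /* 1 ms period, i.e. 1 kHz */

/* Advance an absolute deadline by ns, keeping tv_nsec in [0, 1e9). */
static void timespec_add_ns(struct timespec *t, long ns)
{
    assert(t != NULL && ns >= 0);
    t->tv_nsec += ns;
    while (t->tv_nsec >= NSEC_PER_SEC) {
        t->tv_nsec -= NSEC_PER_SEC;
        t->tv_sec += 1;
    }
}

/* Example step callback: counts how many cycles ran. */
static void count_step(void *ctx)
{
    ++*(unsigned *)ctx;
}

/* Call step(ctx) once per period for `cycles` periods.
 * Returns 0 on success or an errno-style code on failure. */
static int run_periodic(unsigned cycles, void (*step)(void *), void *ctx)
{
    struct timespec next;
    if (clock_gettime(CLOCK_MONOTONIC, &next) != 0)
        return errno;
    for (unsigned i = 0; i < cycles; ++i) {
        timespec_add_ns(&next, PERIOD_NS);
        int rc;
        do {
            /* Absolute sleep: an interrupted wait resumes toward the same deadline. */
            rc = clock_nanosleep(CLOCK_MONOTONIC, TIMER_ABSTIME, &next, NULL);
        } while (rc == EINTR);
        if (rc != 0)
            return rc;
        step(ctx);
    }
    return 0;
}
```

In a real deployment the calling thread would typically also request a real-time policy with `sched_setscheduler`, pin itself with `sched_setaffinity`, and lock its pages with `mlockall`. Those steps need privileges and are omitted here.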

One Interface, Any Robot

RAL achieves hardware independence with a clear four-step flow.

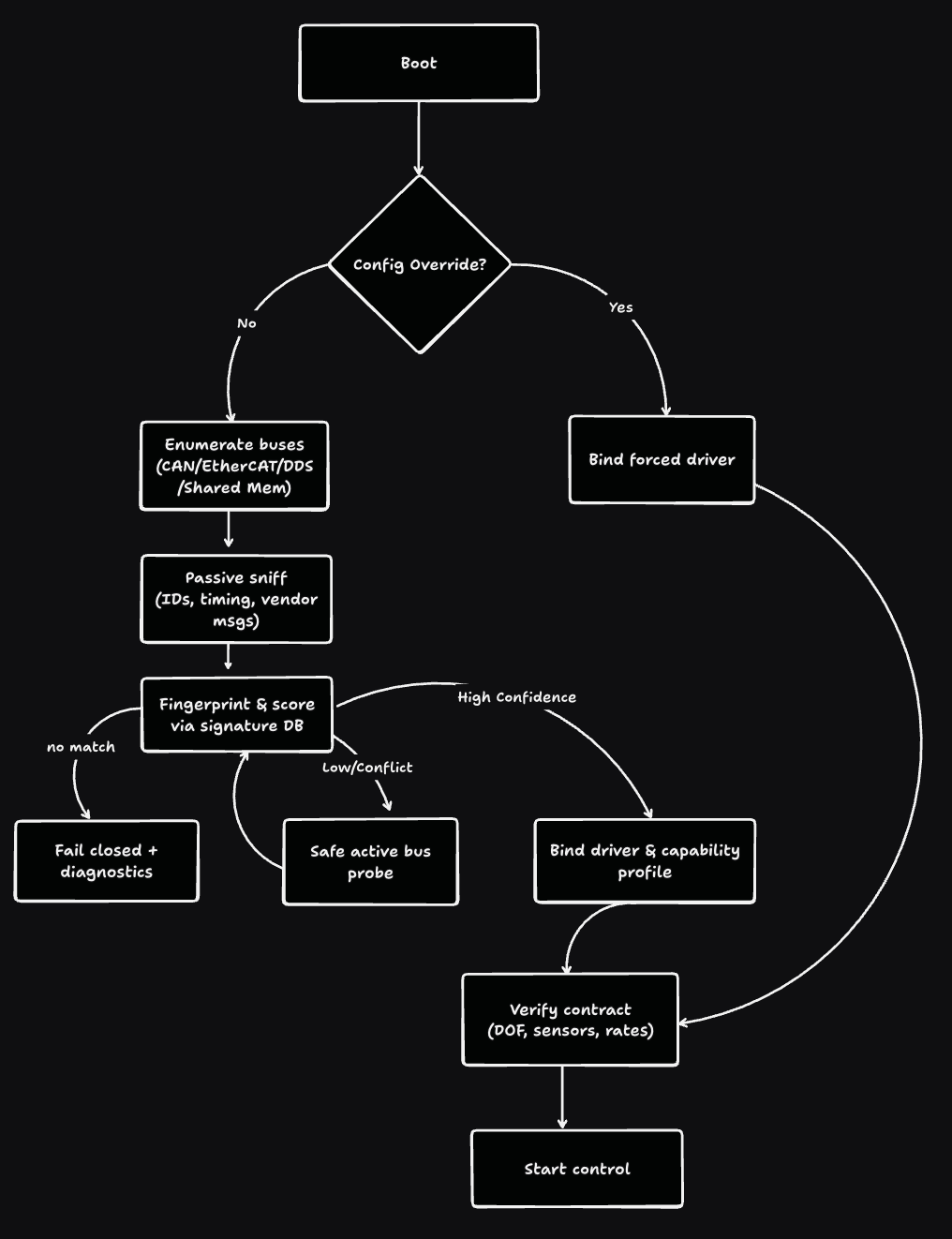

Step 1: Automatic Robot Detection

Within ~200 ms at boot, RAL identifies the connected robot, whether that is a Unitree humanoid (CAN), a UR arm (EtherCAT), or a MuJoCo sim (shared memory). It does so by:

- Passive fingerprinting (bus traffic patterns, IDs, timing)

- Protocol signatures (vendor heartbeats/announcements)

- Safe active probes (only if needed)

- Config override (operator-forced selection)

Detection runs once at startup, uses only statically allocated memory, and fails closed if confidence is low.
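The fail-closed rule can be sketched as a decision over per-candidate confidence scores. The function name, the result codes, and the two thresholds below are assumptions for illustration; the source does not specify how RAL scores candidates.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical result codes; a non-negative return is the winning index. */
#define RAL_DETECT_NONE      (-1)   /* no candidate is confident enough */
#define RAL_DETECT_AMBIGUOUS (-2)   /* the two best candidates are too close */

/* scores[i] is the confidence in candidate i, assumed to lie in [0, 1].
 * The winner must reach min_score and beat the runner-up by min_margin. */
static int ral_detect_decide(const double *scores, size_t n,
                             double min_score, double min_margin)
{
    assert(scores != NULL || n == 0);
    int best = -1;
    double best_score = 0.0;
    double second_score = 0.0;

    for (size_t i = 0; i < n; ++i) {
        if (best < 0 || scores[i] > best_score) {
            if (best >= 0)
                second_score = best_score;   /* old leader becomes runner-up */
            best = (int)i;
            best_score = scores[i];
        } else if (scores[i] > second_score) {
            second_score = scores[i];
        }
    }

    if (best < 0 || best_score < min_score)
        return RAL_DETECT_NONE;              /* fail closed: do not start control */
    if (n > 1 && best_score - second_score < min_margin)
        return RAL_DETECT_AMBIGUOUS;         /* caller may probe once, then fail closed */
    return best;
}
```

The function keeps no state between calls and performs no allocation, which fits a detector that runs once on static memory.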

Step 2: Protocol Mapping

After identification, native protocols are mapped to RAL’s universal schema. CAN, EtherCAT, DDS, or shared memory details stay below the line; upstream only sees one interface.
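One common way to keep transport details "below the line" is a table of function pointers per backend. The sketch below assumes that pattern; `ral_backend_t`, the toy simulator, and the wrapper functions are hypothetical and only illustrate the shape of such a mapping.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical backend interface: one instance per transport or vendor SDK. */
typedef struct {
    const char *name;
    int (*read_state)(void *ctx, double *position_rad, size_t n);
    int (*write_command)(void *ctx, const double *position_rad, size_t n);
    void *ctx;                      /* backend-private data */
} ral_backend_t;

/* Toy "simulator" backend: commanded positions are echoed back as state. */
#define TOY_SIM_JOINTS 4
typedef struct { double joints[TOY_SIM_JOINTS]; } toy_sim_t;

static int toy_sim_read(void *ctx, double *position_rad, size_t n)
{
    const toy_sim_t *sim = ctx;
    if (n > TOY_SIM_JOINTS)
        return -1;
    for (size_t i = 0; i < n; ++i)
        position_rad[i] = sim->joints[i];
    return 0;
}

static int toy_sim_write(void *ctx, const double *position_rad, size_t n)
{
    toy_sim_t *sim = ctx;
    if (n > TOY_SIM_JOINTS)
        return -1;
    for (size_t i = 0; i < n; ++i)
        sim->joints[i] = position_rad[i];
    return 0;
}

static ral_backend_t toy_sim_backend(toy_sim_t *sim)
{
    ral_backend_t b = { "toy-sim", toy_sim_read, toy_sim_write, sim };
    return b;
}

/* Upstream code only ever calls through the backend table. */
static int ral_read_state(const ral_backend_t *b, double *out, size_t n)
{
    assert(b != NULL && b->read_state != NULL);
    return b->read_state(b->ctx, out, n);
}

static int ral_write_command(const ral_backend_t *b, const double *cmd, size_t n)
{
    assert(b != NULL && b->write_command != NULL);
    return b->write_command(b->ctx, cmd, n);
}
```

Under this assumption, a CAN or EtherCAT backend would fill in the same two function pointers, and callers of `ral_read_state` would not change.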

Step 3: State Normalization

All robot state is normalized into a single representation:

- Units (e.g., degrees → radians), frames, and conventions

- Per-joint position, velocity, torque, temperature

- IMU/FT and base state aligned to the same coordinate frames
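As a small worked example of the unit and convention step, the sketch below converts a native joint reading to radians with a per-joint scale, sign, and zero offset, and inverts the mapping for commands. The struct and function names are assumptions, not RAL identifiers.

```c
#include <assert.h>
#include <stddef.h>

#define RAL_PI 3.14159265358979323846

/* Hypothetical per-joint mapping from a vendor convention to the universal one. */
typedef struct {
    double scale;       /* native unit to radians, e.g. pi/180 for degrees */
    double sign;        /* +1.0 or -1.0, to match the universal axis direction */
    double offset_rad;  /* zero-position offset, applied after scaling */
} ral_joint_map_t;

/* native reading -> radians in the universal convention */
static double ral_normalize_position(const ral_joint_map_t *m, double native)
{
    assert(m != NULL);
    return m->sign * (native * m->scale) + m->offset_rad;
}

/* radians in the universal convention -> native command value
 * (exact inverse of the function above, since sign is +1 or -1) */
static double ral_denormalize_position(const ral_joint_map_t *m, double rad)
{
    assert(m != NULL && m->scale != 0.0);
    return m->sign * (rad - m->offset_rad) / m->scale;
}
```

For a joint that reports degrees with a flipped axis, a native reading of 90 maps to -π/2 rad, and denormalizing -π/2 rad recovers 90.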

Step 4: Command Execution

Commands follow the mirror path: from the universal schema through translation to the hardware. RAL validates and clamps against per-robot capabilities and delivers at deterministic time steps.
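The validate-and-clamp step might look like the following sketch, which rejects non-finite input outright and otherwise limits each joint to its capability profile. The limit struct, the function names, and the choice to reject rather than clamp NaN are assumptions made for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical per-joint capability limits. */
typedef struct {
    double pos_min_rad;
    double pos_max_rad;
    double torque_max_nm;   /* symmetric limit: |torque| <= torque_max_nm */
} ral_joint_limits_t;

static double clamp_double(double v, double lo, double hi)
{
    return v < lo ? lo : (v > hi ? hi : v);
}

/* Returns -1 if any value is NaN (command rejected, nothing modified).
 * Otherwise clamps in place and returns how many values were changed. */
static int ral_validate_and_clamp(const ral_joint_limits_t *lim,
                                  double *pos, double *tau, size_t n)
{
    assert(lim != NULL && pos != NULL && tau != NULL);

    for (size_t i = 0; i < n; ++i) {
        if (pos[i] != pos[i] || tau[i] != tau[i])   /* NaN is never equal to itself */
            return -1;
    }

    int clamped = 0;
    for (size_t i = 0; i < n; ++i) {
        double p = clamp_double(pos[i], lim[i].pos_min_rad, lim[i].pos_max_rad);
        double t = clamp_double(tau[i], -lim[i].torque_max_nm, lim[i].torque_max_nm);
        clamped += (p != pos[i]) + (t != tau[i]);
        pos[i] = p;
        tau[i] = t;
    }
    return clamped;
}
```

Returning the number of clamped values lets the caller surface saturation as a metric instead of hiding it.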

Robot Detection: Implementation

Fingerprints include (examples):

- Unitree: CAN arbitration IDs, heartbeat frequency

- UR/Realman: EtherCAT slave maps, vendor IDs

- Simulators: shared-memory headers and timing patterns

- New robots: add signatures and a capability profile; no redesign required
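A signature table is one way to make "add signatures, no redesign" concrete: supporting a new robot means adding a row. The sketch below scores a CAN-style signature as the fraction of expected arbitration IDs seen on the bus. The struct, the scoring rule, and any ID values used with it are placeholders, not real vendor data.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical signature entry: the arbitration IDs a robot is expected to emit. */
typedef struct {
    const char     *robot;
    const uint32_t *can_ids;
    size_t          id_count;
} ral_signature_t;

/* Score in [0, 1]: the fraction of expected IDs present in the observed set.
 * Extra, unexpected IDs on the bus are ignored by this simple rule. */
static double ral_signature_score(const ral_signature_t *sig,
                                  const uint32_t *seen, size_t seen_count)
{
    assert(sig != NULL && (seen != NULL || seen_count == 0));
    if (sig->id_count == 0)
        return 0.0;

    size_t hits = 0;
    for (size_t i = 0; i < sig->id_count; ++i) {
        for (size_t j = 0; j < seen_count; ++j) {
            if (sig->can_ids[i] == seen[j]) {
                ++hits;
                break;              /* count each expected ID at most once */
            }
        }
    }
    return (double)hits / (double)sig->id_count;
}
```

A real detector would likely combine several such scores (IDs, heartbeat period, vendor announcements) before applying a confidence threshold.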

Failure Handling & Degradation

- Detect-time failure: No confident match → do not start control. Emit a structured diagnostic and metrics for remote triage.

- Ambiguity: Multiple candidates → safe probe once; if still ambiguous, fail closed.

- Run-time link loss (bus down, stale state, timing violation): Enter safe mode (hold/idle/brake as appropriate), signal watchdog, and require an explicit resume handshake.

- Partial capability (missing sensor/joint): Start in degraded mode with explicit flags; never silently assume presence.
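The run-time rule above (enter safe mode on link loss or stale state, and stay there until an explicit resume) amounts to a small latched state machine. The sketch below is one possible shape; the type names, the staleness threshold, and the resume conditions are assumptions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef enum { RAL_MODE_RUNNING, RAL_MODE_SAFE } ral_mode_t;

/* Hypothetical supervisor state. */
typedef struct {
    ral_mode_t mode;
    uint64_t   last_state_ns;    /* timestamp of the most recent state sample */
    uint64_t   stale_after_ns;   /* state older than this counts as stale */
} ral_supervisor_t;

static int ral_is_stale(const ral_supervisor_t *s, uint64_t now_ns)
{
    return now_ns > s->last_state_ns
        && now_ns - s->last_state_ns > s->stale_after_ns;
}

/* Record that a fresh state sample arrived. */
static void ral_supervisor_on_state(ral_supervisor_t *s, uint64_t now_ns)
{
    assert(s != NULL);
    s->last_state_ns = now_ns;
}

/* Called every cycle. Faults latch: recovery alone never leaves safe mode. */
static ral_mode_t ral_supervisor_tick(ral_supervisor_t *s, uint64_t now_ns, int link_up)
{
    assert(s != NULL);
    if (s->mode == RAL_MODE_RUNNING && (!link_up || ral_is_stale(s, now_ns)))
        s->mode = RAL_MODE_SAFE;
    return s->mode;
}

/* Explicit resume request: honored only if the fault has actually cleared.
 * Returns 1 if control resumed, 0 otherwise. */
static int ral_supervisor_resume(ral_supervisor_t *s, uint64_t now_ns, int link_up)
{
    assert(s != NULL);
    if (s->mode != RAL_MODE_SAFE || !link_up || ral_is_stale(s, now_ns))
        return 0;
    s->mode = RAL_MODE_RUNNING;
    return 1;
}
```

Keeping the transition back to running in a separate function makes the resume handshake a deliberate act by the caller, not a side effect of the link recovering.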

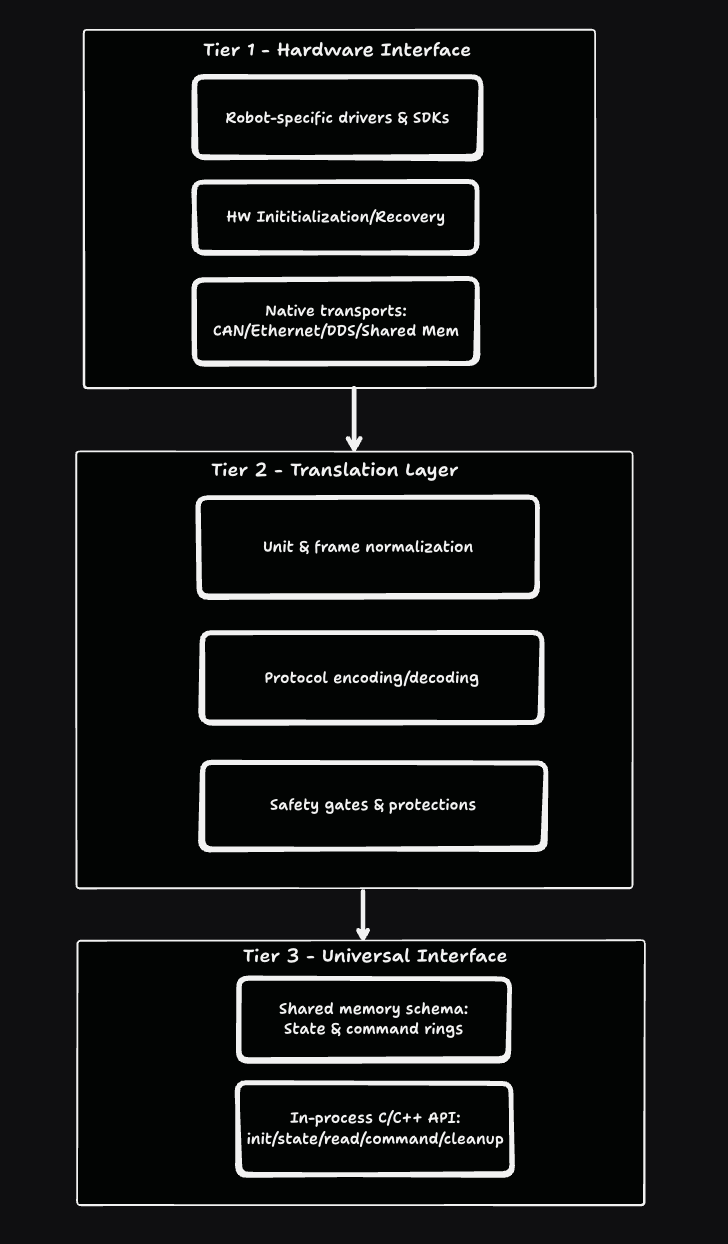

Three-Tier Abstraction Model

Tier 3 — Universal Interface (RAL export)

What RAL exposes: a shared-memory schema (state/commands) and a C API for deterministic access. RML is a client of this interface; Tier 3 describes RAL’s boundary, not RML’s responsibilities.

Tier 2 — Translation Layer

Maps between hardware specifics and the universal schema: units/frames, protocol codec, safety/clamping, and timing alignment.

Tier 1 — Hardware Interface

Vendor SDKs, low-level drivers, interrupt handling, and native protocol implementations live here.

With this design, upstream systems see identical contracts and behavior whether they are controlling a humanoid or a simple arm.